New HPC Cluster: Iris

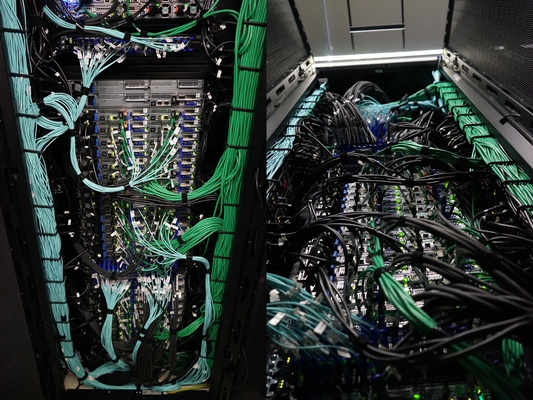

After a long and tremendous effort, delayed by many external factors, the iris cluster, part of the High Performance Computing (HPC) facility of the University of Luxembourg (see http://hpc.uni.lu), has now been released.

The hardware characteristics of the cluster are as follows:

- Computing Capacity: 100 nodes, 2800 cores, for a theoretical peak (Rpeak) of 107.52 TFlops
    - Dell C6320, Intel Xeon E5-2680 v4 @ 2.4 GHz [2x14c]
    - 128 GB RAM per node

- Interconnect:
    - 10/40 Gb Ethernet network
    - InfiniBand EDR 100 Gb/s, non-blocking fat-tree topology
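As a sanity check, the quoted peak can be reproduced from the per-node specification. The sketch below is a back-of-the-envelope calculation, not the method used to produce the announced figure. It assumes each Broadwell core retires 16 double-precision FLOP per cycle (two AVX2 FMA units on 4-wide vectors) at the nominal 2.4 GHz clock, so the result is an upper bound. It also converts the 100 Gb/s EDR link rate into MB/s, the unit printed by the OSU bandwidth tests.

```python
# Back-of-the-envelope check of the iris figures (a sketch, not the
# method used for the official numbers).
# Assumption: each Broadwell core retires 16 double-precision FLOP per cycle
# (2 AVX2 FMA units x 4 doubles x 2 operations) at the nominal clock.

NODES = 100
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 14
CLOCK_GHZ = 2.4
DP_FLOP_PER_CYCLE = 16


def rpeak_tflops(total_cores, ghz, flop_per_cycle):
    """Theoretical peak: cores x GHz x FLOP/cycle gives GFlops; divide by 1000 for TFlops."""
    return total_cores * ghz * flop_per_cycle / 1000.0


def gbit_to_mb_per_s(gbit_per_s):
    """Convert a link rate in Gb/s to MB/s (decimal units: 1 MB = 10^6 bytes)."""
    return gbit_per_s * 1000.0 / 8.0


total_cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
peak = rpeak_tflops(total_cores, CLOCK_GHZ, DP_FLOP_PER_CYCLE)
edr = gbit_to_mb_per_s(100)  # InfiniBand EDR data rate

print(f"{total_cores} cores, Rpeak = {peak:.2f} TFlops")  # 2800 cores, Rpeak = 107.52 TFlops
print(f"EDR 100 Gb/s = {edr:.0f} MB/s before protocol overhead")  # 12500 MB/s
```

Measured results (HPL Rmax, `osu_bw` peak bandwidth) are expected to land below these theoretical ceilings.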

Update (2018-2019): 68 new regular nodes, 24 GPU nodes (each featuring 4 NVIDIA Tesla V100 cards) and 4 large-memory nodes were added.

- Storage Capacity (based on IBM Spectrum Scale, formerly GPFS): 1440 TB raw
    - DDN GridScaler
    - GS7K base enclosure + 3 SS8460 expansion enclosures
    - 248 disks (240x 6 TB self-encrypting drives (SED) + 8 SSDs)
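The 1440 TB raw figure follows directly from the spinning disks. The sketch below assumes, since the post does not say so explicitly, that only the 240 x 6 TB SED drives are counted and the 8 SSDs are left out; it also shows the same capacity in binary TiB, which is closer to what filesystem tools usually report.

```python
# Sketch: where the 1440 TB raw capacity comes from.
# Assumption: only the 240 x 6 TB self-encrypting HDDs are counted;
# the 8 SSDs are excluded from the raw total.

HDD_COUNT = 240
HDD_SIZE_TB = 6  # decimal terabytes (10^12 bytes), as drive vendors quote them
SSD_COUNT = 8

raw_tb = HDD_COUNT * HDD_SIZE_TB
raw_tib = raw_tb * 10**12 / 2**40  # same capacity expressed in binary TiB

print(f"{HDD_COUNT + SSD_COUNT} disks, raw HDD capacity = {raw_tb} TB (~{raw_tib:.0f} TiB)")
```

Usable capacity after RAID parity and filesystem overhead is lower than this raw number.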

In terms of software, we made a couple of drastic changes compared to our other clusters. These choices were driven by the goal of opening the UL HPC to external users, as well as by native support for container jobs based on Shifter.

Credits for the pictures: Hyacinthe Cartiaux, Valentin Plugaru

I have run a couple of benchmarks to assess the effective performance of this new cluster (run across its 100 nodes).

- Network performance was measured with the OSU Micro-Benchmarks.

- Computing performance was measured with HPL (High-Performance Linpack), the reference benchmark used for the TOP500 list.
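For readers who want to run HPL themselves, the sketch below applies two common tuning rules of thumb: choose the problem size N so that the N x N matrix of doubles fills roughly 80% of aggregate memory (rounded down to a multiple of the block size NB), and pick a near-square P x Q process grid with P <= Q. These are generic heuristics, not the parameters used for the runs reported here; the 80% fill factor, NB = 192, one MPI rank per core and reading "128 GB" as 128 GiB are all illustrative assumptions.

```python
import math

# Generic HPL sizing heuristics (a sketch; NB = 192, the 80% memory fill factor
# and one MPI rank per core are illustrative assumptions, not the iris run settings).


def hpl_problem_size(total_mem_bytes, mem_fraction=0.8, nb=192):
    """Largest N, a multiple of nb, whose N x N matrix of 8-byte doubles
    fits in mem_fraction of the aggregate memory."""
    n = int(math.sqrt(mem_fraction * total_mem_bytes / 8))
    return (n // nb) * nb


def hpl_process_grid(nprocs):
    """Most nearly square P x Q factorisation of nprocs, with P <= Q."""
    p = math.isqrt(nprocs)
    while nprocs % p != 0:
        p -= 1
    return p, nprocs // p


nodes = 100
mem_per_node = 128 * 2**30  # assumption: "128 GB" read as 128 GiB
ranks = nodes * 2 * 14      # one MPI rank per core

n = hpl_problem_size(nodes * mem_per_node)
p, q = hpl_process_grid(ranks)
print(f"Ns = {n}, NBs = 192, Ps = {p}, Qs = {q}")
```

The resulting values would go on the `Ns`, `NBs`, `Ps` and `Qs` lines of `HPL.dat`; in practice NB and the grid shape are usually refined empirically.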